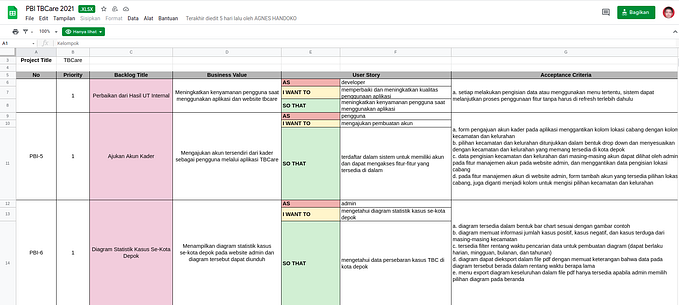

Visualizing Performance Testing and Monitoring Using Prometheus and Grafana

Performance testing is an important part of web development. Users tend to be extremely sensitive to site performance. Minor delays in request handling can lead to lost revenue, lost customers, lost jobs, you get the idea! So, it is important to ensure that a web application can handle the amount of traffic it is expected to receive, both before and after deployment. The former is generally done using performance testing, and the latter using performance monitoring. The difference between the two is explained in this K6 article.

Running a performance test once is easy. Doing it on a regular basis, however, can be a drain on a programmer’s productivity. With continuous deployment being the norm in web development nowadays, it is preferable to automate performance testing and monitoring. This article will delve into how you can do this using tools for metric ingestion and graph composition.

Background

Assume that we have a basic microservice-based application that uses NGINX as its load balancer. Users access this app through our predetermined domain and are routed by our NGINX proxy.

We wish to perform load testing on this architecture. There are several tasks to be done:

- Create a service that can send requests to our website (in the staging area) and record several metrics (response times, status codes, response data length, etc.).

- Export our data to a metric ingestion tool that will store it and handle queries about it.

- Draw the collected metrics as (several) meaningful graphs to be presented at the next sprint review. This will help determine whether our application is ready for deployment, requires some refactoring, or needs to be moved to more capable (and expensive) hardware.
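Locust will handle the first task for us. Still, it helps to see what "recording several metrics" means for a single request. The sketch below is a minimal illustration that uses only the standard library, and it is not how Locust is implemented. It sends one GET request and records the method, URL, status code, response time in milliseconds, and response body length. The `RequestSample` and `timed_get` names are hypothetical.

```python
import time
import urllib.error
import urllib.request
from dataclasses import dataclass


@dataclass
class RequestSample:
    """The raw numbers recorded for one request."""
    method: str
    url: str
    status: int
    response_time_ms: float
    content_length: int


def timed_get(url: str, timeout: float = 10.0) -> RequestSample:
    """Send one GET request and record its status code, latency and body size.

    Connection failures (urllib.error.URLError) are not caught and propagate.
    """
    start = time.perf_counter()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            status = resp.status
            body = resp.read()
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses are still samples, so record them too.
        status = err.code
        body = err.read()
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    return RequestSample("GET", url, status, elapsed_ms, len(body))
```

A load testing tool runs many such requests concurrently on behalf of simulated users. It then aggregates the samples into statistics such as averages and percentiles.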

Creating a load testing template

There are several tools designed to make load testing easier. This article will use Locust, an open-source, Python-based, easy-to-set-up tool, to perform load testing on our application. This article assumes that readers have basic knowledge of Locust and Docker: how to run locustfiles, how to run a Docker container (with or without docker-compose), and how to allow containers to communicate with each other.

After writing our test code, we will host this load testing tool in a Docker container designed to run a Locust process.

Note that the ideal Locust deployment is not a single instance (which is what we are using here), but multiple instances in a master-worker architecture. However, a single instance is enough for this demonstration.

Our Locust instance should now be up and running at http://localhost:8089. Swarm several users and let the test data flow, as shown below:

Locust also provides several basic charts, which can be accessed from the Charts tab.

These are nice data representations. However, they have some weaknesses:

- They are not very flexible. For example, what if we want to know the 90th-percentile response time? What about the maximum response time of each endpoint, in chart form? Or the number of workers if we are running multiple instances? What if a coworker asks these questions in the next meeting? We wouldn’t suggest waiting until the meeting after that just so a single chart can be composed, would we?

- Suppose that we want to combine our Locust data with data from other tools (CPU usage and network bandwidth, for example). The Locust web UI is not enough to visualize such potential insights.

Clearly, there is a need to separate data collection tools and data processing tools. This is where Prometheus comes in.

Taken from their website:

Prometheus is an open-source systems monitoring and alerting toolkit originally built at SoundCloud. Since its inception in 2012, many companies and organizations have adopted Prometheus, and the project has a very active developer and user community. It is now a standalone open source project and maintained independently of any company.

Prometheus is made mainly for monitoring systems, but in reality it can handle a variety of time-based metrics, such as our Locust metrics from before. Here is how it works: at a regular interval, Prometheus gathers (scrapes) data from our metric-generating tools and makes that data available for querying by any service that needs it.

Run a metric exporter for Prometheus

In order for Prometheus to recognize our data, we need an exporter: a service that gathers our data and exposes it in Prometheus’s standardized format. Luckily, there are a variety of open-source exporters for just about any monitoring or performance testing tool, Locust included. For the sake of this demonstration, this container from ContainerSolutions will be used.

The ContainerSolutions exporter requires us to specify the URL of our Locust instance in the environment variable LOCUST_EXPORTER_URI. Let’s do exactly that and start our composition again. Open http://localhost:9646/metrics and you will see something like this:

This is the list of metrics generated by our Locust instance, in a Prometheus-friendly format. Notice that all rows (except the comments) share the same format: metric_name{labels} value, where the labels are key="value" pairs. For example, the average content length of GET requests to the path /cases/case-subjects is stored as

`locust_requests_avg_content_length{method="GET",name="/cases/case-subjects"} …`

Note that the labels are not fixed: an exporter can attach whatever labels it wants, and they help us filter our queries in Prometheus later on.
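To make the row format concrete, here is a simplified parser for a single sample line, written using only Python’s standard library. It is a sketch, not a full implementation of the Prometheus text format. It skips comment lines, ignores optional trailing timestamps, and does not unescape label values. `parse_sample` is a hypothetical helper name.

```python
import re

# One sample line: metric_name{label="value",...} value [timestamp]
SAMPLE_RE = re.compile(
    r'^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)'
    r'(?:\{(?P<labels>[^}]*)\})?'
    r'\s+(?P<value>\S+)'
)
# One label pair inside the braces; values may contain escaped characters.
LABEL_RE = re.compile(r'(?P<key>[a-zA-Z_][a-zA-Z0-9_]*)="(?P<val>(?:[^"\\]|\\.)*)"')


def parse_sample(line: str):
    """Return (name, labels, value) for a sample line, or None for comments/blanks."""
    line = line.strip()
    if not line or line.startswith("#"):
        return None  # blank lines and "# HELP" / "# TYPE" comments carry no sample
    m = SAMPLE_RE.match(line)
    if m is None:
        raise ValueError(f"not a sample line: {line!r}")
    labels = {lm["key"]: lm["val"] for lm in LABEL_RE.finditer(m["labels"] or "")}
    return m["name"], labels, float(m["value"])
```

For example, `parse_sample('locust_users 10')` returns `('locust_users', {}, 10.0)`. A line with labels yields the labels as a dictionary, which is the form in which Prometheus lets you filter on them.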

This exporter is not the only way to transfer Locust data into Prometheus. We can even create our own exporter! The full rules for this can be seen here and are not part of this demonstration.
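If you do write your own exporter, the official `prometheus_client` Python library is the usual starting point. Still, an exporter fundamentally needs very little, as this standard-library-only sketch shows: it serves samples at /metrics in the text format. The `collect` callable is a hypothetical hook that should return `(name, labels, value)` tuples; this is where your own data gathering (for example, reading Locust’s statistics) would go. The sketch also omits the `# HELP` and `# TYPE` lines that real exporters normally emit.

```python
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from typing import Callable, Dict, Iterable, Tuple

Sample = Tuple[str, Dict[str, str], float]


def escape_label(value: str) -> str:
    """Escape backslashes, double quotes and newlines, as the text format requires."""
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render(samples: Iterable[Sample]) -> str:
    """Render (name, labels, value) tuples as Prometheus text-format lines."""
    lines = []
    for name, labels, value in samples:
        label_str = ",".join(f'{k}="{escape_label(v)}"' for k, v in labels.items())
        lines.append(f"{name}{{{label_str}}} {value}" if label_str else f"{name} {value}")
    return "\n".join(lines) + "\n"


def make_handler(collect: Callable[[], Iterable[Sample]]):
    """Return a handler class that serves collect()'s current samples at /metrics."""

    class MetricsHandler(BaseHTTPRequestHandler):
        def do_GET(self):
            if self.path != "/metrics":
                self.send_error(404)
                return
            # Collect fresh values on every scrape, so each request sees a current snapshot.
            body = render(collect()).encode("utf-8")
            self.send_response(200)
            self.send_header("Content-Type", "text/plain; version=0.0.4; charset=utf-8")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, *args):
            pass  # keep the console quiet between scrapes

    return MetricsHandler
```

Serving it could look like `ThreadingHTTPServer(("0.0.0.0", 9646), make_handler(my_collect)).serve_forever()`, where `my_collect` is your own (hypothetical) collection function and the port is an arbitrary choice.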

The /metrics path is where Prometheus receives its data. Prometheus requests this page regularly at a fixed interval, and each request records a snapshot of the current values for use by other services.
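The scraping cycle can be modeled as a toy loop: fetch the page, timestamp the result, store it, and wait until the next scrape is due. This sketch is only a mental model under simplifying assumptions. Prometheus itself parses every sample into a time series in its own storage rather than keeping raw page bodies. The `fetch` callable is a hypothetical stand-in for the HTTP request to /metrics. `clock` and `sleep` are injectable so the loop can be tested without actually waiting.

```python
import time
from typing import Callable, List, Tuple

Snapshot = Tuple[float, str]  # (wall-clock timestamp, raw /metrics body)


def scrape_loop(
    fetch: Callable[[], str],
    interval_s: float,
    rounds: int,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> List[Snapshot]:
    """Toy model of scraping: fetch at a fixed rate and keep timestamped snapshots."""
    snapshots: List[Snapshot] = []
    start = clock()
    for i in range(rounds):
        # Scrape i is due at start + i * interval, so a slow fetch does not push later scrapes back.
        delay = start + i * interval_s - clock()
        if delay > 0:
            sleep(delay)
        snapshots.append((time.time(), fetch()))
    return snapshots
```

Pointed at the exporter, this could look like `scrape_loop(lambda: urllib.request.urlopen("http://localhost:9646/metrics").read().decode(), 2.0, 5)` (with `import urllib.request`).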

Build a Prometheus instance to gather our data

Next, we should create a Prometheus instance to actually gather the data exported above. First, create a configuration file like this:

The important lines here are lines 24 and 30. Line 24 (the scrape interval) defines how often Prometheus takes a snapshot. Line 30 (the scrape targets) lists the address of our exporter; if there are multiple exporters, we list all of them here. The configuration above tells Prometheus to fetch http://locust-exporter:9646/metrics every 2 seconds.
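The configuration file itself is not reproduced in this text. Here is a minimal sketch of an equivalent prometheus.yml, generated from Python to keep all examples in one language. It assumes the scrape interval is set globally and uses a hypothetical job name, `locust`. The exporter address and the 2-second interval come from the description above. Passing several targets shows how multiple exporters would be listed.

```python
from typing import Sequence


def prometheus_config(targets: Sequence[str], scrape_interval: str = "2s",
                      job_name: str = "locust") -> str:
    """Render a minimal prometheus.yml that scrapes /metrics on every target."""
    target_list = ", ".join(f'"{t}"' for t in targets)
    return (
        "global:\n"
        f"  scrape_interval: {scrape_interval}\n"
        "\n"
        "scrape_configs:\n"
        f"  - job_name: {job_name}\n"
        "    static_configs:\n"
        # metrics_path defaults to /metrics, so only host:port is needed here.
        f"      - targets: [{target_list}]\n"
    )
```

Writing it to disk is then a one-liner, e.g. `Path("prometheus.yml").write_text(prometheus_config(["locust-exporter:9646"]))` with `from pathlib import Path`.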

Now, we can define our Prometheus instance:

Update our Docker configuration as above and open http://localhost:9090. It should display a page like the one below:

Here, you can query the collected metrics in any way you like. For example, try getting the average response time of GET requests across all paths:
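The same query can also be sent programmatically through Prometheus’s HTTP API, whose `/api/v1/query` endpoint evaluates an instant query. In this sketch the metric name `locust_requests_avg_response_time` is an assumption. It follows the naming of `locust_requests_avg_content_length`, so check your own /metrics page for the exact name. `query_url`, `parse_vector`, and `instant_query` are hypothetical helper names.

```python
import json
import urllib.parse
import urllib.request
from typing import Dict, List, Tuple


def query_url(base_url: str, promql: str) -> str:
    """Build an instant-query URL for Prometheus's HTTP API (/api/v1/query)."""
    return base_url.rstrip("/") + "/api/v1/query?" + urllib.parse.urlencode({"query": promql})


def parse_vector(payload: dict) -> List[Tuple[Dict[str, str], float]]:
    """Flatten an instant-vector response into (labels, value) pairs."""
    if payload.get("status") != "success":
        raise RuntimeError(f"query failed: {payload.get('error')}")
    data = payload["data"]
    if data["resultType"] != "vector":
        raise ValueError(f"expected a vector, got {data['resultType']}")
    # Each value is [unix_timestamp, "value-as-string"].
    return [(r["metric"], float(r["value"][1])) for r in data["result"]]


def instant_query(base_url: str, promql: str):
    """Run one instant query; HTTP errors (e.g. a bad query) raise urllib.error.HTTPError."""
    with urllib.request.urlopen(query_url(base_url, promql), timeout=10) as resp:
        return parse_vector(json.load(resp))
```

Against the local instance, `instant_query("http://localhost:9090", 'locust_requests_avg_response_time{method="GET"}')` would return one `(labels, value)` pair per matching series, which here means one per request path.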

You can also get the chart version of the data by accessing the Graph tab:

It looks pretty neat compared to the Locust charts. This time, however, we can generate our own custom graphs with ease. Prometheus has solved the first weakness. However:

- This interface is still not very user-friendly

- The current query cannot be saved (conveniently) for later use

- Mixing multiple labels in a single graph is still an issue

We can solve this using Grafana.

Composing and Visualizing Data using Grafana

Prometheus is very good at storing and manipulating metrics. On the other hand, Grafana is very good at displaying and visualizing data.

Simply add a new Docker service for Grafana and open http://localhost:3000.

Note the inclusion of a Docker volume. It persists any changes you make to your Grafana instance. You can run Grafana without it, but your settings will be lost whenever the container is removed.

Open your Grafana instance and log in with the username and password ‘admin’. You can create new users, change the password, and manage permissions later.

Grafana can receive data from multiple data sources. To add a new Prometheus data source, go to Settings > Data Source and pick Prometheus from the list. Name the new data source “Prometheus” (without the quotes) and enter the URL of your Prometheus instance. Below is an example:
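Adding the data source can also be scripted through Grafana’s HTTP API (`POST /api/datasources`), which is handy when the setup has to be repeated. The sketch below only builds the request and does not send it. It assumes the default `admin`/`admin` credentials mentioned above and a Prometheus URL of `http://prometheus:9090`, where `prometheus` is a hypothetical Docker Compose service name. With `"access": "proxy"`, the URL is resolved from inside the Grafana container, so `localhost` there would point at Grafana itself rather than at Prometheus.

```python
import base64
import json
import urllib.request


def datasource_request(grafana_url: str, prometheus_url: str,
                       user: str = "admin", password: str = "admin") -> urllib.request.Request:
    """Build (but do not send) a request that adds a Prometheus data source to Grafana."""
    body = json.dumps({
        "name": "Prometheus",   # the imported community dashboard expects this name
        "type": "prometheus",
        "url": prometheus_url,
        "access": "proxy",      # Grafana's backend, not the browser, talks to Prometheus
    }).encode("utf-8")
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        grafana_url.rstrip("/") + "/api/datasources",
        data=body,
        method="POST",
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
    )
```

Sending it would then be `urllib.request.urlopen(datasource_request("http://localhost:3000", "http://prometheus:9090"))`.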

Verify that the data source can be accessed by Grafana by clicking Save & Test. Now, it is time to create a new chart.

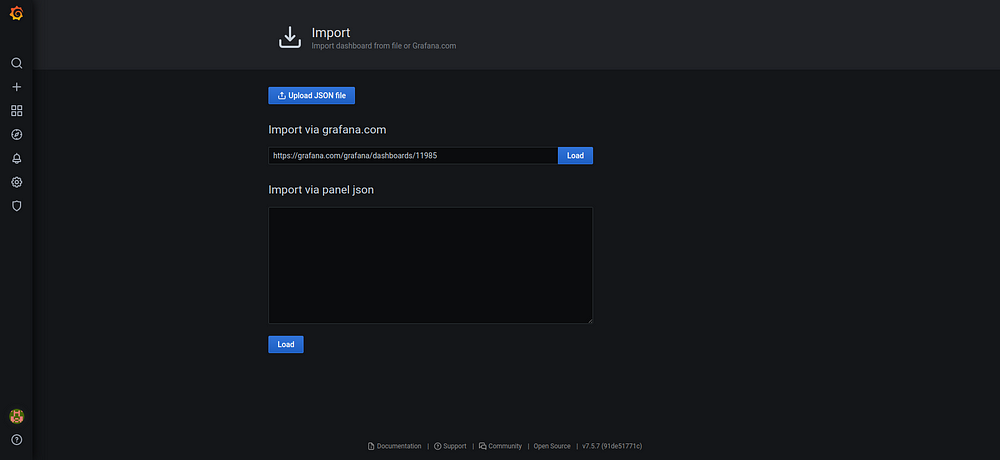

Grafana organizes charts into dashboards. To create your first dashboard, you can navigate to Create > Dashboard. For this demo, however, I’d suggest using an existing dashboard created by the community via Create > Import. Fortunately for us, ContainerSolutions has created a default dashboard along with their exporter. In the Import via Grafana.com section, paste the dashboard URL (given in the README of their GitHub repository here).

Click Load and then Import. Your dashboard will be available and displaying data immediately.

It looks much better, doesn’t it? Note that in order for this dashboard to receive data from your Prometheus data source, the data source needs to be named “Prometheus” (without the quotes). If your dashboard displays “No Data”, you can either rename the data source or edit each of the charts to query from your data source.

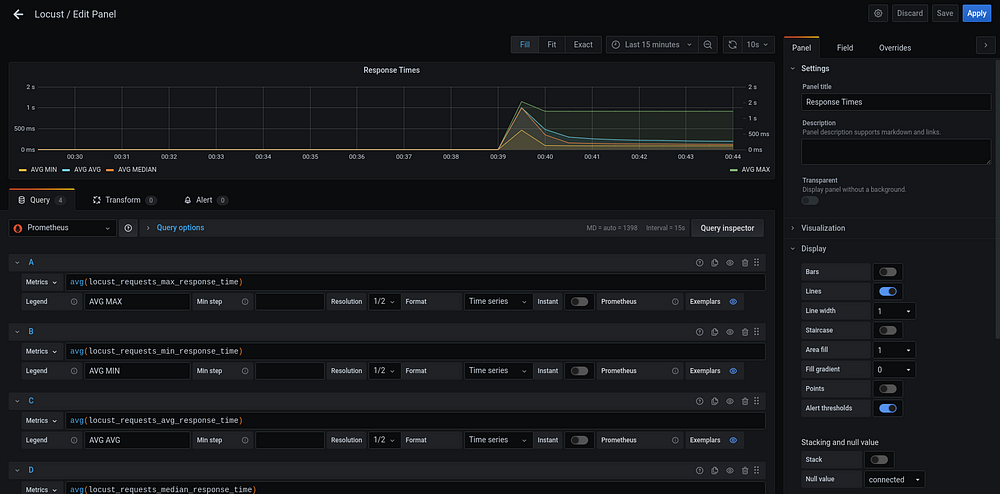

Let’s look at what happens behind the scenes. Pick the Response Times chart and click Edit.

Below the chart is a list of line configurations. Each row in this list corresponds to one line in the chart. Because we are using Prometheus, a line receives its metrics by querying our Prometheus instance. Each line is configured separately, so you can run a different query with different labels for each line. This means you can combine data from different tools in a single graph.
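As a rough illustration of that per-line structure, here is a simplified sketch of the JSON that describes a time-series panel with two queries. It is based on the shape of recent Grafana dashboard JSON: a `targets` list in which each entry has a `refId` and a PromQL `expr`. It omits most fields a real panel contains and uses a hypothetical data source `uid`. In the usage note after the code, the CPU query assumes that a node_exporter is also being scraped, which is not part of this demonstration.

```python
import json
from typing import List, Tuple


def panel_with_queries(title: str, exprs: List[Tuple[str, str]],
                       datasource_uid: str = "prometheus") -> dict:
    """Sketch of a time-series panel; each (legend, PromQL) pair becomes one line."""
    return {
        "type": "timeseries",
        "title": title,
        "datasource": {"type": "prometheus", "uid": datasource_uid},
        # One target per line; refIds A, B, C... identify each query in the editor.
        "targets": [
            {"refId": chr(ord("A") + i), "expr": expr, "legendFormat": legend}
            for i, (legend, expr) in enumerate(exprs)
        ],
    }


if __name__ == "__main__":
    print(json.dumps(panel_with_queries("Example", [("users", "locust_users")]), indent=2))
```

Combining Locust and system data might then look like `panel_with_queries("Latency vs CPU", [("avg GET response time", 'locust_requests_avg_response_time{method="GET"}'), ("CPU busy %", '100 * (1 - avg(rate(node_cpu_seconds_total{mode="idle"}[1m])))')])`. Both metric names here are assumptions about what your exporters expose.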

Grafana also provides several other features, such as:

- Real-time updates.

- A wider variety of charts (line graphs, bar graphs, heatmaps, logs, etc.).

- The ability to save dashboards and revisit them later.

- Permission management.

- Plugins to expand and customize your dashboards.

Prometheus and Grafana are two tools that complement each other. Using both of them can make your testing and monitoring tasks easier. This article aims to demonstrate one way of using them together.